Purpose Built Generative AI systems.

Positron

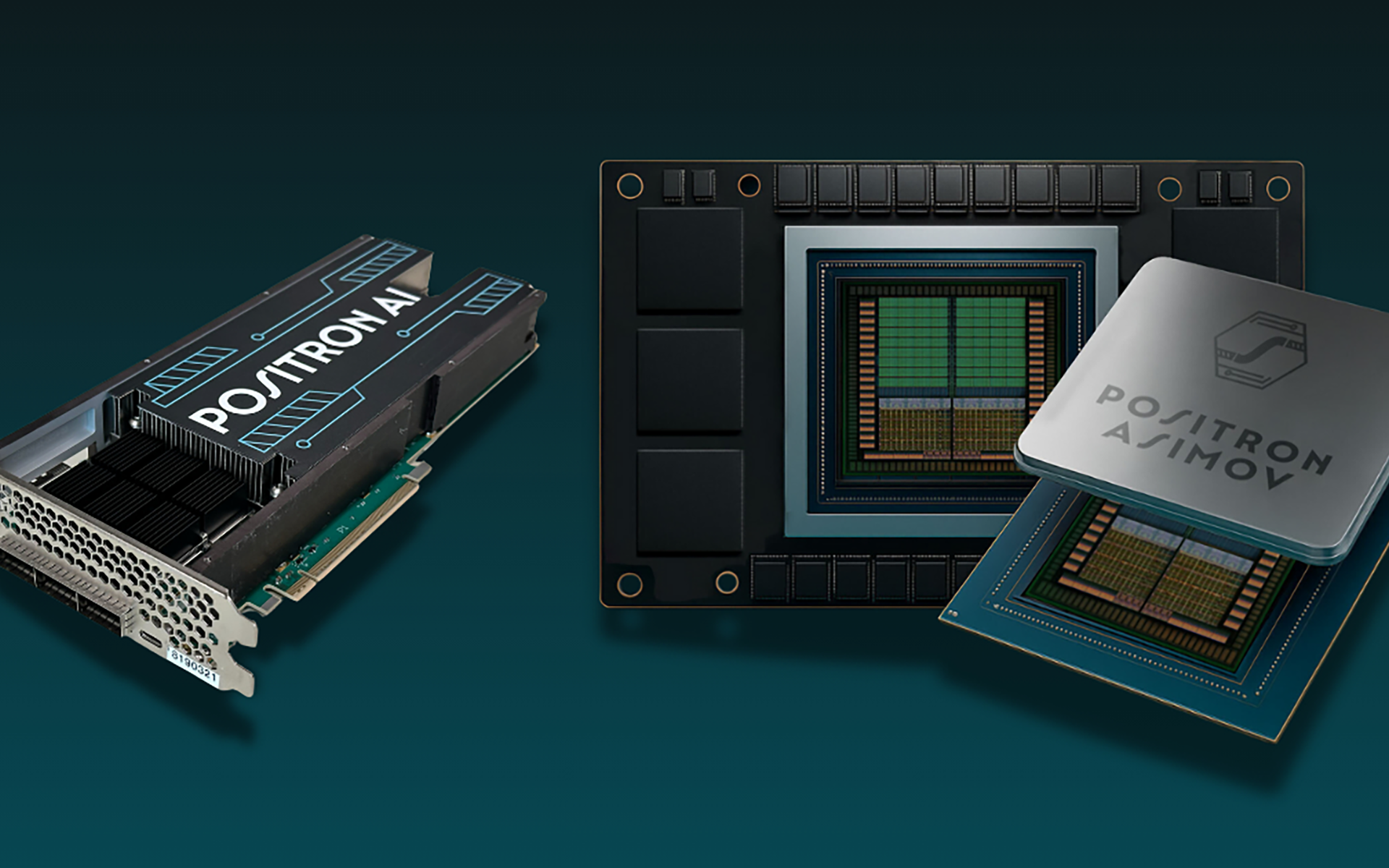

Purpose‑built silicon that slashes the cost and power of transformer inference.

Purpose‑built silicon that slashes the cost and power of transformer inference.

Positron

Generative‑AI adoption now hinges on inference economics: every cent per 1,000 tokens and every watt per rack dictates margin. GPUs were never architected for this workload at scale. Positron ships drop‑in accelerators that deliver up to 3–5x better performance‑per‑dollar than the latest data‑center GPUs today (Atlas FPGA) and is on track to reach 10x with its 2026 Asimov ASIC—unlocking mass‑market AI services while halving energy use.

When tokens are measured in the trillions, pennies compound into billions. Positron provides the cost curve advantage cloud providers and enterprises need to keep AI growth profitable.

IMPACT STORY

Positron develops purpose-built silicon for highly specialized workloads—think super intelligent frontier models, trillion-plus parameter LLMs, vision transformer models. They co-design their chips with the systems that need them, eliminating overhead down to the gate level. No speculative execution, no bloated memory hierarchy, no general-purpose tax. Atlas (shipping) is an FPGA appliance that slots into existing racks; Asimov (tape‑out 2026, production 2026) is a 2TB, >4 TB/s bandwidth chip delivering under‑400 W peak power (completely feasible to air cool). Both expose an OpenAI‑compatible API, so customers swap endpoints—no model retraining or quantization required.

In practice, this means:

• Cheapest cost per generation (whether that may be a token, image or video)

• 80 % lower energy and 2–4x rack density for data centers

• Deterministic latency (<5 ms @ 8K tokens) for real‑time AI applications

• Seamless deployment: upload .pt /.safetensors, update endpoint, go live

Positron isn’t building chips for everyone—just the hardware every inference provider wishes they had if they could design from scratch.

Why it Matters

The frontier is moving toward physical systems: robotics, AR, aerospace, infrastructure, energy. In these worlds, you don’t get to throw more cores at the problem. You don’t get to “scale up.” You design down—to the metal. The problem space demands hardware-native thinking, and the current ecosystem still assumes software-first abstractions. Inference spend alone is forecast to rise from ~$106 B in 2025 to ~$255 B in 2030 (19 % CAGR). GPU scarcity and energy cost are the cap on that growth curve. Positron removes the bottleneck, enabling cloud vendors to offer everyday‑low‑price AI services and capture share from hyperscalers.

As the world fragments into workload-specific domains, ASICs aren’t a nice-to-have—they’re the enabler. Positron is part of a coming wave of silicon-native companies, where compute is no longer a constraint, but a competitive weapon.

Founder Alignment

The Positron team has built silicon that’s shipped into data centers, spacecraft, and classified systems. They’ve wrestled with toolchains, floorplanning, verification, and bring-up under real-world pressure. They understand the physics, the economics, and the pain. Most important: they know what not to build.

The Bet

We backed Positron because they design from physical first principles—not compiler assumptions. In a world where founders are rewriting reality—across autonomy, sensing, control, and energy—Positron gives them the compute that actually lets them deploy it.

This isn’t Moore’s Law. It’s Murphy’s Law, handled in hardware.

Greater Performance

Lower Power

Lower Capex Cost. (*compared to NVIDIA's H100/H200 systems.)